Lecture 3

LANGUAGE AND THE BRAIN / MIND

Plan

1. Cognitive studies of language as a part of cognitive science

2. Neurolinguistics, psycholinguistics, and cognitive linguistics

3. NEUROLINGUISTICS

3.1. Fields of research

3.2. Language areas. Aphasias

3.3. Linguistic function in the brain

4. PSYCHOLINGUISTICS

4.1. Child language

4.2. Language of adults

5. COGNITIVE LINGUISTICS

5.1. The internalized language: formal and semantic aspects

(cognitive linguistics vs. generative grammar)

5.2. Objectives, data, and methodology of cognitive linguistics. Conceptual analysis

5.3. Conceptual analysis vs. semantic analysis

5.4. Conceptual structures and cognitive structures. Cognitive map


Cognitive studies of language aim to reveal how language is represented in the human brain and mind. These studies are carried out by neurolinguistics, psycholinguistics, and cognitive linguistics, which together make up a constitutive part of cognitive science. Cognitive science is a federation of disciplines that attempt to explain how the brain and mind of humans and other living organisms process information. In addressing this problem, each discipline focuses on particular data and applies its own research techniques. Cognitive studies of language focus on the information that can be represented by linguistic signs.

The disciplines that form cognitive science can be divided into theoretically oriented and technologically oriented ones. (1) The disciplines pursuing the theoretical goal of understanding how the brain and mind acquire, process, store, and retrieve information are neuroscience and neurolinguistics, psychology, psycholinguistics and cognitive linguistics, anthropology, philosophy, semiotics, and logic. (2) The disciplines pursuing the technological goal of developing theories that can be applied to simulating the workings of the brain/mind on a computer are systems theory (the study of systems in general), computer science (the study of the structure, expression, and mechanization of the algorithms that underlie different aspects of working with information), information theory (the study of the quantification of information), Artificial Intelligence theory (the study of computers and computer software capable of intelligent behavior), and knowledge engineering (which covers all the technical, scientific, and social aspects of building, maintaining, and using knowledge-based systems). The two groups constantly interact: they share methodologies and draw on each other's insights in exploring the phenomenon of the brain/mind (Fig. 3.1).


Within the interdisciplinary paradigm of cognitive science, cognitive studies of language play one of the most conspicuous roles: language, as a semiotic system used in human cognition and communication, relates directly to information and thus provides researchers with abundant empirical data.


The three cognitive branches of language study (neurolinguistics, psycholinguistics, and cognitive linguistics), though interrelated, remain distinct fields.

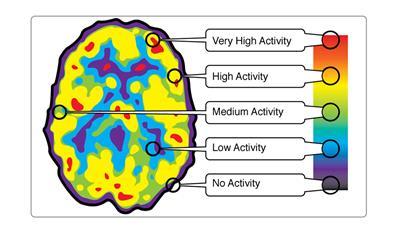

Neurolinguistics centers on the problem 'language and the brain'. It investigates the basis in the human nervous system for language development and use, and specifically aims to construct a model of the brain's control over the processes of speaking, listening, reading, writing, and signing. The ultimate objective is the BRAIN, which is accessed through language (language → BRAIN). The data are collected by observing both normal speech and speech that deviates from the norm (language pathology). Contemporary studies of brain activity use new brain imaging technologies, among them positron emission tomography (PET), magnetic resonance imaging (MRI), functional magnetic resonance imaging (fMRI), and magnetoencephalography (MEG), which can highlight patterns of brain activation as people engage in various language tasks (Fig. 3.2).


Psycholinguistics and cognitive linguistics focus on the problem 'language and the mind'. Psycholinguistics is an experimental science. It studies the correlation between linguistic behavior and the mental processes and skills that underlie this behavior, seeking linguistic evidence to support psychological theories of how the mind works when people produce and comprehend speech. Thus, the ultimate objective of psycholinguistics is the MIND (language → MIND). In this respect, psycholinguistics is close to psychology. Both disciplines also apply similar research methods based on observation and experiment, and use similar data obtained by observing the actual speech behavior of adults and children.

Cognitive linguistics is a theoretical science. It seeks psychological (cognitive) evidence to explain the phenomena of language and speech, so its ultimate objective is LANGUAGE and its construal as influenced by the mind's workings (mind → LANGUAGE). Like psycholinguistics, cognitive linguistics is therefore concerned with uncovering the mental structures and cognitive operations that underlie language and speech. The methods used in cognitive linguistics (conceptual analysis in its various forms) are an extension of linguistic methods, especially those developed in structural semantics. The data analyzed are mostly the systems of different languages and texts as the 'products' of speech. The theoretical assumptions of cognitive linguistics are based on its own findings, on the insights of other linguistic fields, and on data obtained by the various disciplines that contribute to cognitive science.


The coining of the term "neurolinguistics" has been attributed to Harry Whitaker, who founded the Journal of Neurolinguistics in 1985. As an interdisciplinary field, neurolinguistics draws methodology and theory from fields such as neuroscience, neurobiology, neuropsychology, cognitive psychology, linguistics, communication disorders, and computer science, among others. Much work in neurolinguistics investigates how the brain can implement the processes that theoretical linguistics and psycholinguistics consider necessary for producing and comprehending language.

3.1. Fields of research

Neurolinguistics research is carried out in all the major areas of linguistics. The main linguistic subfields and the ways neurolinguistics addresses them are given in the table below.

| Subfield | Description | Research questions in neurolinguistics |
|---|---|---|
| Phonetics | the study of speech sounds | how the brain extracts speech sounds from an acoustic signal; how the brain separates speech sounds from background noise |
| Phonology | the study of how sounds are organized in a language | how the phonological system of a particular language is represented in the brain |
| Morphology and lexicology | the study of how words are structured and stored in the mental lexicon | how the brain stores and accesses words that a person knows |
| Syntax | the study of how multiple-word utterances are constructed | how the brain combines words into constituents and sentences; how structural and semantic information is used in understanding sentences |
| Semantics | the study of how meaning is encoded in language | |

Neurolinguistics investigates several major topics, including (a) where language information is processed, (b) how language processing unfolds over time, and (c) how brain structures are related to language acquisition and learning. Research in first language acquisition has established that infants from all linguistic environments go through similar and predictable stages. Some neurolinguistic studies attempt to find correlations between stages of language development and stages of brain development, while others address the physical changes in the brain (known as neuroplasticity) that take place during second language acquisition, when adults learn a new language. One of the central topics of neurolinguistics is speech and language pathology associated with the language areas of the brain, which is the subject of aphasiology.

3.2. Language areas. Aphasias


The findings of Broca and Wernicke established the field of aphasiology and the idea that language can be studied by examining the physical characteristics of the brain. Research in aphasiology also benefited from the early 20th-century work of Korbinian Brodmann, who made a 'map' of the brain's surface, dividing it into numbered areas on the basis of each area's cellular structure (cytoarchitecture); these areas, known as Brodmann areas, are still widely used in neuroscience today.


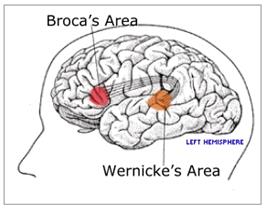

Broca's and Wernicke's areas are specialized for different linguistic tasks: Broca's area handles the motor production of speech, and Wernicke's area handles auditory speech comprehension. Damage to these and some other areas causes different kinds of aphasia.

• Broca's aphasia affects speech production, while comprehension of speech may be relatively unimpaired. This type of aphasia is characterized by slow, effortful speech, which is why it is also called expressive aphasia. Speech consists mostly of content words (nouns, verbs, and adjectives); function words (determiners, prepositions, pronouns) are often omitted. The speech therefore appears to lack grammar (agrammatism); this style is called telegraphic speech. In many speakers with Broca's aphasia, articulation is affected as well, because the brain site responsible for articulation is close to Broca's area. Comprehension of spoken language is relatively good: in conversation, speakers with Broca's aphasia understand what is said. When they are tested on the comprehension of complex syntactic structures, however, their performance drops dramatically. It is therefore assumed that in Broca's aphasia the grammatical component of language is affected. Reading and writing are also impaired, in a way comparable to oral comprehension and production.

• Wernicke's aphasia is characterized by difficulty in comprehending speech and by the production of speech that is fluent but empty of meaning (fluent aphasia). Speech production is fluent and well articulated, but the speaker produces sound and word substitutions, so-called 'paraphasias', for example 'spool' for 'spoon' or 'mother' for 'wife'. Distortions may be further away from the target words than in these examples, so that the listener may not recognize them as existing words; such sound strings are called 'neologisms', for example 'stroofel'. When many of them are produced, the text is no longer understandable, which is referred to as 'jargon' or 'word salad'. Comprehension of language is moderately to severely impaired. The combination of a severe comprehension disorder and a severe production disorder results in poor communication abilities. Writing and reading are affected in parallel.

• Anomic aphasia has no clear lesion site in the brain responsible for it, but the lesions (damage) are usually found in the temporal and/or parietal lobe. Anomic aphasia is characterized by word-finding problems. The oral output of a speaker with anomic aphasia is basically fluent, but because of the pauses that arise from the word-finding problems, the speech rate may be low. Word-finding problems are recognizable not only by pauses but also by the use of less specific words, such as 'thing', 'do', 'here', 'there'; this is called 'empty speech'. Comprehension of spoken language is relatively good, although complex commands are difficult to understand. Reading is usually spared as well, but in writing the word-finding problems show up again.

• Conduction aphasia was first described by Carl Wernicke. The name of the syndrome derives from the fact that it is caused by a lesion in the tract (the arcuate fasciculus) that connects Wernicke's and Broca's areas. It is characterized by the production of sound errors, for example 'scrample' for 'scrabble'. The patient is well aware of the errors, which results in many self-corrections, for example 'scramble, no strapple, no stample…'. The errors are particularly prominent when the patient is asked to repeat longer words and sentences. Comprehension of spoken and written language is relatively good but is impaired for complex and long sentences. Writing shows an error pattern similar to that of speech.


• Global aphasia is caused by a lesion that is often extensive and spans the frontal, temporal, and parietal lobes. All language modalities are severely impaired. Spoken output is often restricted to one- or two-word utterances, sometimes only 'yes' and 'no', which are not always used correctly. Comprehension is severely impaired, and reading and writing are hardly possible. This, of course, results in very poor communication abilities.

Although this classification has contributed considerably to the understanding of aphasia, most patients suffer from a 'mixed' form of aphasia, which cannot be labeled with any of the types mentioned above.

3.3. Linguistic function in the brain

Before the 19th century, the common theory of brain function held that the brain was not a structure made up of discrete, independent centers, each specialized for a different function, but had to be regarded as a single working unit. This theory was termed 'holism'. However, at the beginning of the 19th century, scientists began to assign functions to particular neuronal structures and came to favor the theory of localization of function within the brain, which later found strong support in the discovery of Broca's and Wernicke's areas. Wernicke, who was neither a pure localizationist nor a pure holist, developed an elaborate model of language processing in which he proposed that only basic perceptual and motor activities are localized to single cortical areas, while more complex intellectual functions result from interconnections between these functional sites. He stated that different components of a single behavior are processed in a number of different regions of the brain, and thus provided early support for the idea of distributed processing and connectionism. In the first part of the 20th century, the idea of functional segregation fell into disrepute and was replaced by the proposal that higher cognitive abilities depend on the function of the brain as a whole. Memory and language, however, remained an active battleground between the opponents and the defenders of localization.

Until the 1960s, most of the information about the localization of linguistic function came from patients with brain lesions: the language deficits resulting from brain injuries were compared with the areas of the brain that had been damaged. However, even if the lesion can be accurately located, the function examined after the injury is not simply normal function with one component missing; it represents a new, reorganized state of the brain. In the 1960s, the American neurologist Norman Geschwind (1926–1984) refined Wernicke's model of language processing, and this so-called Wernicke–Geschwind model still forms the basis of current investigations of normal and disturbed language function. Based on data from a large number of patients, Geschwind argued that regions of the parietal, temporal, and frontal lobes of the brain are critically involved in human linguistic capacities. The model holds that the comprehension and formulation of language depend on Wernicke's area, from which the information is transmitted (via the arcuate fasciculus) to Broca's area, where it is prepared for articulation.
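Read as a box-and-arrow diagram, the classical model makes predictions about which tasks survive which lesions. The Python sketch below is a deliberately crude illustration under stated assumptions, not a clinical or neurological model: it reduces the model to three components (Wernicke's area, the arcuate fasciculus, Broca's area), and the task paths and syndrome labels are our own simplification of the aphasia types described above. Anomic and mixed aphasias, which have no single characteristic lesion site, fall outside it.

```python
from typing import Dict, FrozenSet, Tuple

# Simplifying assumption: a task is preserved only if every component on its
# path is intact. Comprehension relies on Wernicke's area, fluent articulation
# on Broca's area, and repetition on the whole chain
# Wernicke -> arcuate fasciculus -> Broca.
TASK_PATHS: Dict[str, Tuple[str, ...]] = {
    "comprehension": ("wernicke",),
    "fluent_articulation": ("broca",),
    "repetition": ("wernicke", "arcuate_fasciculus", "broca"),
}

# Classical syndromes keyed by (comprehension, fluency, repetition) preserved.
# Every combination this three-box model can produce is listed.
SYNDROMES: Dict[Tuple[bool, bool, bool], str] = {
    (True, True, True): "no aphasia predicted",
    (True, False, False): "Broca's aphasia",
    (False, True, False): "Wernicke's aphasia",
    (True, True, False): "conduction aphasia",
    (False, False, False): "global aphasia",
}


def predicted_profile(lesioned: FrozenSet[str]) -> Dict[str, bool]:
    """Map each task to True if no component on its path is lesioned."""
    return {
        task: not any(part in lesioned for part in path)
        for task, path in TASK_PATHS.items()
    }


def classical_label(lesioned: FrozenSet[str]) -> str:
    """Name the classical syndrome the toy model predicts for a lesion."""
    p = predicted_profile(lesioned)
    key = (p["comprehension"], p["fluent_articulation"], p["repetition"])
    return SYNDROMES[key]


if __name__ == "__main__":
    for lesion in ({"broca"}, {"wernicke"}, {"arcuate_fasciculus"},
                   {"wernicke", "arcuate_fasciculus", "broca"}):
        print(sorted(lesion), "->", classical_label(frozenset(lesion)))
```

The sketch also shows where the model is too coarse: it predicts that comprehension is simply spared in Broca's aphasia, whereas, as noted above, comprehension of complex syntactic structures breaks down in these patients.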

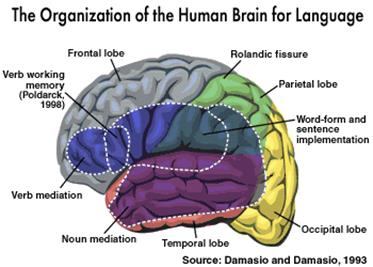

The further expansion and refinement of the Wernicke–Geschwind model has been made possible by advanced functional neuroimaging methods (PET and fMRI in particular). These methods have contributed new localization information and have provided evidence that a large region of the left hemisphere is clearly involved in language production and comprehension, and that language localization varies from one individual to another. Contemporary studies are offering new data on what brain areas might actually do and how they might contribute to a network of neuronal structures that collectively participate in language processing. Their results support the notion that, besides the classical Broca's and Wernicke's areas, several additional distributed cortical and subcortical neuronal structures in both hemispheres make a significant contribution to language function (Fig. 3.4).


Most of the areas consistently activated by linguistic processing lie within the left temporal, parietal, and frontal lobes, with some in right-hemisphere areas. Extended areas of the left temporal cortex are engaged in word retrieval, naming, morphosyntactic processing, parsing, syntactic comprehension and semantic analysis, and also in the articulation of speech. Several areas of the left frontal cortex, including Broca's area, are involved in word retrieval, verbal working memory, syntactic processing, semantic encoding and retrieval, and also articulation, comprehension, and global attentional or executive functions. Cortical areas of the right hemisphere that correspond to Broca's and Wernicke's areas in the left hemisphere are associated with prosodic elements, which impart additional meaning to verbal communication, and with other linguistic functions that have not yet been clearly defined. Moreover, the cerebellum and subcortical regions such as the basal ganglia and especially the thalamus (presumably a lexical-semantic interface) may play more than merely supportive roles in linguistic processing.

The many new brain regions emerging from modern lesion and functional imaging studies of language suggest that the classical Wernicke–Geschwind model, although useful for many years, is oversimplified. Areas all over the brain are recruited for language processing: some are involved in lexical retrieval, some in grammatical processing, some in the production of speech, and some in attention and memory. These findings are still too recent for an overarching theory of how these areas interact, but there is a definite need for hybrid models of language processing. Language is both localized and distributed: specific language processing operations are carried out in particular brain locations, which are organized into distributed networks.

Psycholinguistics studies the mental mechanisms of language processing (speaking, listening, reading, and writing) in both a native and a second language. It also studies the processes underlying language acquisition, how language processes break down in language pathologies such as dyslexia (a specific difficulty in learning to read) and aphasia, and how these processes relate to brain function. In this respect, psycholinguistics overlaps with neurolinguistics.

The mind cannot be observed directly, so psycholinguists have to devise ways of finding out how it works. They get their evidence from two main sources: observation of spontaneous utterances, and psycholinguistic experiments. Spontaneous utterances of ordinary speech are somewhat messy, in that dozens of different factors have to be taken into account when they are analyzed, so psycholinguists devise experiments in which the number of variable factors can be controlled and the results can be accurately measured. But this methodology presents a problem, sometimes called the 'experimental paradox'. The more carefully an experiment is designed to limit variables, the more unnatural the situation in which subjects are placed, and the more likely they are to behave oddly. On the other hand, the more a situation is allowed to resemble 'real life', the less one is able to sort out the various interacting factors. Ideally, major topics should be tackled both by observing spontaneous speech and by devising experiments; when the results coincide, this is a sign that progress is being made.

The three 'core' psycholinguistic topics are 'how humans acquire language', 'how humans comprehend speech', and 'how humans produce speech'. The first topic requires psycholinguistic evidence from child language, while the other two rely mostly on evidence obtained from the language of adults.

4.1. Child language

Language acquisition by a child involves such focal issues as (a) the 'growing', or development, of child language, (b) the origin of language, (c) the rule-governed nature of child language, and (d) the way a child learns the meanings of words.

(a) 'Growing' of child language. Language is both nature and nurture: humans are naturally 'pre-programmed' to speak, but their latent language faculty is activated only if the child is exposed to actual input from a given language. Although the environments in which children acquire their language vary, all children go through similar stages of language acquisition within the same general time frame. Human infants pay attention to language from birth. They produce recognizable one-word utterances at around 12–15 months (girls may start earlier, at about 9 months). Two-word utterances with content words such as nouns and verbs appear at around 18 months. Function words like 'will' and 'my' tend to appear after 24 months. By the age of 3 years, a child has usually acquired the basic syntactic structures of the input language. Language therefore has all the hallmarks of maturationally controlled behavior.

The goal of acquisition theories is to explain how any normal child, born into any linguistic community, learns the language (or languages) of that community. For many theorists, the challenge is also to explain (a) the relatively short period in which language acquisition appears to be achieved, (b) the fact that language is acquired without either overt teaching or sufficient information from the input (what the child hears), and (c) the fact that acquisition follows a path that seems remarkably similar in all children, despite variation in early childhood experiences and in the types of languages they are exposed to. Many researchers also agree that language acquisition is largely independent of general cognitive development, even though some deficits in cognitive development can affect certain aspects of language development. Whether the language is spoken or signed, whether it is highly inflected like Finnish or uninflected like Mandarin, whether the child is raised in poverty or luxury, by highly educated or illiterate adults, or even by other children, normally developing children seem to pass through roughly the same stages in the same sequence and to reach the steady state of acquired language at about the same age.

The evidence from the development of children's language strongly suggests that there is a 'critical period' for first language acquisition, during which the mechanism(s) responsible for language development are primed to receive input. After the critical period, a person is unable to acquire language fully, and this has lasting consequences for his/her mental development. The evidence comes from unfortunate natural experiments in which children were raised in isolation (or near isolation) from language-using older members of our species (i.e. they were severely neglected or were raised by animals). With regard to second language acquisition, the critical period is referred to as a 'sensitive period', during which a second language, like the first one, is learned by a child effortlessly and without instruction. The critical / sensitive period lasts from birth to 8–12 years of age (some scholars restrict it to 5 years of age and relate it to the period of lateralization, i.e. the formation of the basic neural networks and the specialization of the brain's hemispheres). This period is in turn subdivided into sub-periods, of which there are two main ones: the period up to 2–5 years is critical / sensitive for speech sounds (phonetics), and the period up to 8–12 years is important for grammar. There is significant debate over whether individuals learning a second language go about it in the same way as first language learners, or whether it is a fundamentally different process. Evidence suggests that during the sensitive period children acquire a second language much better than adolescents and adults do.

Natural linguistic endowment and a linguistic environment are therefore both necessary for language development. However, theories of language acquisition differ in the relative weight they give to these two factors as the sources of language (its 'origin').

(b) Origin of language. Some scholars emphasize the social aspect of language and the role of parents. Children, they argue, are social beings who have a great need to interact with those around them. Furthermore, all over the world, child-carers tend to talk about the same sorts of things, chatting mainly about food, clothes, and other objects in the immediate environment. Motherese, or caregiver language, has fairly similar characteristics almost everywhere: caregivers slow down their rate of speech and speak in well-formed utterances, with quite a lot of repetition. People who stress these social aspects of language claim that there is no need to search for complex innate mechanisms: social interaction with caring caregivers is sufficient to cause language to develop. This view is turning out to be something of an exaggeration. The fact that parents make it easier for children to learn language does not explain why children are so quick to acquire it: intelligent chimps exposed to intensive sign language rarely get beyond 200 words and two-word sequences. Although children do not hear examples of every possible structural pattern, they nonetheless attain a grammar capable of generating all the possible sentences of their language (the so-called poverty of the stimulus argument). And although each child is exposed to different data in a different order, all end up with the same basic grammar for their language, which would be unexpected under an imitative account. Less radical researchers recognize the role of the inborn language faculty (nature) but consider it less important than the role of input (nurture).

Another dimension of difference between theories concerns the innate aspects of language acquisition. This difference is known as the content–process controversy. The realization that language is maturationally controlled means that most psycholinguists agree that human beings are innately programmed to speak. But they cannot agree on exactly what is innate, and in particular on the extent (if any) to which language ability is separate from other cognitive abilities.

Some researchers, most notably generative linguists working in a broadly Chomskyan paradigm, argue for a dedicated language acquisition device (LAD), or Universal Grammar (UG), which has evolved for the specific purpose of language acquisition. This device is primed specifically to receive linguistic input and requires only a minimal amount of it to begin building mental representations of language in the child's mind. The fact that the required triggering input is so minimal explains why language acquisition paths are so consistent across otherwise widely varying life experiences. In other words, the uniformity of speech development indicates that children are innately equipped with a blueprint for language; this view represents the so-called content approach.

Despite its influence within linguistics, the UG account actually attracts only a minority of adherents within the broad field of child language research. Most researchers are convinced that language in its entirety can be worked out by the child on the basis of the input, coupled with innate (non-specific) predispositions for analyzing the environment. Children are innately geared to processing linguistic data, for which they use a puzzle-solving ability closely related to other cognitive skills; the ability to produce and comprehend language is just one of these skills. This conception is known as the process approach.

The whole controversy is far from being resolved, though psycholinguists hope that the increasing amount of work on the acquisition of languages other than English may shed more light on it. Some relevant evidence is provided by the rule-governed nature of child language.

(c) Rules of child language. Instead of learning language by imitating those around them, children create their own grammars. From the moment they begin to talk, children seem to be aware that language is rule-governed, and they are engaged in an active search for the rules which underlie the language to which they are exposed. Child language is never at any time a haphazard conglomeration of random words, or a sub-standard version of adult speech. Instead, every child at every stage possesses a grammar with rules of its own even though the system will be simpler than that of an adult. For example, children tend:

• to put negatives at the front of the sentence: No play that. No Fraser drink all tea;

• to put the negative after the first NP (noun phrase): Doggie no bite. That no mummy;

• to put the question word at the front of a sentence: What mummy doing? What kitty eating?

• to form linguistic expressions by analogy, which results in forms like mans, foots, gooses. Such plurals occur even when a child understands and responds correctly to the adult forms men, feet, geese. This is clear evidence that children's own rules of grammar matter more to them than mere imitation.
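The contrast between a productive rule and imitation in the last point can be made concrete. The Python sketch below is an illustration under simplifying assumptions, not a model taken from the acquisition literature: the child's grammar is reduced to one productive plural rule applied to every noun, while the adult grammar applies the same rule only when no stored irregular form exists. The word list and the simplified spelling rule are hypothetical.

```python
# Irregular plurals stored in the adult lexicon (a tiny illustrative sample).
ADULT_IRREGULARS = {"man": "men", "foot": "feet", "goose": "geese"}


def regular_plural(noun: str) -> str:
    """Simplified productive rule: add -es after s, sh, ch, x, z; else add -s."""
    if noun.endswith(("s", "sh", "ch", "x", "z")):
        return noun + "es"
    return noun + "s"


def adult_plural(noun: str) -> str:
    # A stored irregular form takes precedence over the regular rule.
    return ADULT_IRREGULARS.get(noun, regular_plural(noun))


def child_plural(noun: str) -> str:
    # At this stage the rule applies to every noun, even to nouns whose
    # irregular adult plural the child already understands.
    return regular_plural(noun)


for noun in ("dog", "man", "foot", "goose"):
    print(f"{noun}: adult {adult_plural(noun)!r}, child {child_plural(noun)!r}")
# dog: adult 'dogs', child 'dogs'
# man: adult 'men', child 'mans'
# foot: adult 'feet', child 'foots'
# goose: adult 'geese', child 'gooses'
```

None of the child's forms occurs in adult input; each is exactly what the rule predicts, which is why such errors count as evidence against a purely imitative account.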

The new constructions of child language appear at first in a limited number of utterances (What mummy doing? What daddy doing? What Billy doing?), and then gradually extend to other utterances (What kitty eating? What mummy sewing?).

Language acquisition is not as uniform as was once thought. Different children use different strategies for acquiring speech. For example, some seem to concentrate on the overall rhythm, and slot in words with the same general sound pattern, whereas others prefer to deal with more abstract slots.

(d) Learning word meanings. The most obvious semantic deviations of child language from the language of adults are undergeneralization and overgeneralization.

Undergeneralization means that a child associates word meanings with particular contexts; e.g. the word white is associated only with snow, but not with a piece of paper or the color of a dress; the word car refers to a toy, but not to a real car. Undergeneralization, which is caused by a lack of abstraction in ontogenesis, is also found in phylogeny, when a language has names for, say, particular shades of red but no word for 'red' as a general hue that subsumes the shades.

Overgeneralization means that a child extends the meaning of a word from 'a kind' to 'the type'; e.g. youngsters may call any kind of small thing 'a crumb' (a crumb, a small beetle, or a speck of dirt), or they may apply the word 'moon' to any kind of light. Children's overgeneralizations are often quite specific, and quite odd, because in categorization children may 'work from a prototype' that differs from that of adults.
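The two terms can be stated precisely by comparing a word's extension (the set of things it is applied to) in child and adult usage. The Python sketch below does this with sets; the referent labels are hypothetical stand-ins taken from the examples above, and real word meanings are of course not finite lists. A child's extension that is a proper subset of the adult one corresponds to undergeneralization, a proper superset to overgeneralization, and partial overlap to a mixed mismatch.

```python
from typing import Set


def classify_extension(child: Set[str], adult: Set[str]) -> str:
    """Compare the referents a child applies a word to with adult usage."""
    if child == adult:
        return "adult-like"
    if child < adult:  # proper subset: the child's meaning is too narrow
        return "undergeneralization"
    if child > adult:  # proper superset: the child's meaning is too broad
        return "overgeneralization"
    return "mismatch (partial overlap or disjoint)"


# Hypothetical referent sets based on the examples in the text.
adult_white = {"snow", "paper", "white dress"}
child_white = {"snow"}
adult_crumb = {"crumb"}
child_crumb = {"crumb", "small beetle", "speck of dirt"}

print(classify_extension(child_white, adult_white))  # undergeneralization
print(classify_extension(child_crumb, adult_crumb))  # overgeneralization
```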

In the course of language acquisition, overgeneralization, along with generalization, allows new knowledge to spread across the board (from semantics to syntax and vice versa). This phenomenon of "bootstrapping" allows learning in one area of language to trigger new learning in another. Semantic bootstrapping involves understanding an utterance in context and using it, in conjunction with innate expectations about language principles, to crack the code of the syntax. For example, a child who does not yet know the required complements of the verb "put" can work them out from understanding utterances such as "Put the cup on the table" in context. Syntactic bootstrapping involves working from an already understood structure to deduce the meaning of a word. For example, if you hear "John glopped his friend on the head and he fell down", you may not know exactly what "glopped" means, but you can work out a lot of what it must mean.

(+ Aitchison, pp. 121-126)

4.2. Language of adults

Psycholinguistic studies of adult language consider various aspects of speech production and comprehension. Speech comprehension can be illustrated by (a) recognizing words and (b) understanding syntax; speech production, by (c) slips of the tongue.

(a) Recognizing words. Understanding language is an active, not a passive, process. Hearers jump to conclusions on the basis of partial information. This has been demonstrated in various experiments. For example, listeners were asked to interpret the following sentences, in which the first sound of the final word was indistinct: Paint the fence and the ?ate. Check the calendar and the ?ate. Here's the fishing gear and the ?ate. The subjects claimed to hear gate in the first sentence, date in the second, and bait in the third. Another example is the text below, which circulated on the Internet as a joke and which readers understand without difficulty:

Since recognizing words involves quite a lot of guesswork, how do speakers make the guesses? Suppose someone had heard 'She saw a do -'. Would the hearer check through the possible candidates one after the other, dog, doll, don, dock, and so on (serial processing)? Or would all the possibilities be considered subconsciously at the same time (parallel processing)? The human mind, it appears, prefers the second method, that of parallel processing, so much so that even unlikely possibilities are probably considered subconsciously. A recent interactive activation theory suggests that the mind is an enormously powerful network in which any word which at all resembles the one heard is automatically activated, and that each of these triggers its own neighbors, so that activation gradually spreads like ripples on a pond. Words that seem particularly appropriate get more and more excited, and those which are irrelevant gradually fade away. Eventually, one candidate wins out over the others.
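The idea of parallel processing with spreading activation can be made concrete with a small simulation. The Python sketch below is a toy in the spirit of the interactive activation idea, not the actual published model. The lexicon, the context weights and the `boost` and `inhibition` values are all hypothetical. Every word matching the heard onset "do-" is activated at once. On each round, support from the context raises a word's activation, while competition from the other active words lowers it. Irrelevant candidates fade away until one candidate wins.

```python
# Toy sketch of parallel word recognition by spreading activation.
# The lexicon, the context weights and the boost/inhibition values are
# hypothetical, chosen only to illustrate the idea described in the text.

LEXICON = ["dog", "doll", "don", "dock", "dot", "cat"]

def initial_activation(heard_onset: str) -> dict[str, float]:
    """Activate, all at once, every word that matches what was heard."""
    return {w: 1.0 if w.startswith(heard_onset) else 0.0 for w in LEXICON}

def update(act: dict[str, float], context: dict[str, float],
           boost: float = 0.2, inhibition: float = 0.1) -> dict[str, float]:
    """One round: context support raises a word's activation, while
    competition from the other active words lowers it (kept within 0..1)."""
    total = sum(act.values())
    new = {}
    for word, a in act.items():
        support = boost * context.get(word, 0.0)
        competition = inhibition * (total - a)
        new[word] = min(1.0, max(0.0, a + support - competition))
    return new

def recognize(heard_onset: str, context: dict[str, float], rounds: int = 10):
    """Update all candidates in parallel for several rounds; the most active wins."""
    act = initial_activation(heard_onset)
    for _ in range(rounds):
        act = update(act, context)
    return max(act, key=act.get), act

# 'She saw a do-' heard in a context that favours an animal reading
winner, final = recognize("do", context={"dog": 1.0, "doll": 0.3})
print(winner)  # dog
```

Here dog, doll, don, dock and dot all start out equally active. Only the contextual support separates them, so dog reaches the ceiling while its competitors gradually fade. This mirrors the "ripples on a pond" description, in a deliberately simplified form.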

(b) Understanding syntax. The process of understanding syntax is similar to that of word recognition: people look for outline clues, and then actively reconstruct the probable message from them. In linguistic terminology, hearers utilize perceptual strategies. They jump to conclusions on the basis of outline clues by imposing what they expect to hear onto the stream of sounds. Such conclusions may rely on syntactic structures or lexical items.

• Syntactically grounded conclusions are exemplified by the application of parsing (analysis of a sentence in terms of its grammatical constituents) to garden-path sentences ("garden path" refers to the saying "to be led down the garden path", meaning "to be deceived, tricked, or seduced"). Such sentences are grammatically correct, but they start in such a way that a reader's most likely interpretation, based on a frequently used pattern, is incorrect. The reader follows this wrong parse (structural analysis) until it turns out to be a dead end. Note that simple ambiguity does not produce a garden-path sentence; rather, the early words in the sentence must have an overwhelmingly more common reading than the one required for the correct understanding. Examples of garden-path sentences follow; for each, the first bracketing, marked with ?, shows the misleading parse, and the second shows the correct one:

Anyone who cooks ducks out of the washing-up.

(Anyone who cooks ducks)? out of the washing-up.

(Anyone who cooks) (ducks out of [= avoids] the washing-up).

The old man the boat.

(The old man)? the boat.

(The old) (man the boat).

The government plans to raise taxes were defeated.

((The government) plans to raise taxes)? were defeated.

(The government plans) (to raise taxes) (were defeated).

• Lexically grounded conclusions are exemplified by the variable interpretation of lexical items. E.g. in the sentence "Clever girls and boys go to university" people usually assume that "clever" refers to both girls and boys. But in "Small dogs and cats do not need much exercise" the word "small" is usually taken to refer to the dogs alone.

Among the disputed questions concerned with understanding syntax is how hearers cope with "gaps", or "traces", left by a word that has been moved to the front of a sentence; e.g. Which box did Bill put in his pocket? The hearer has to understand that the gap, the position filled by the answer to this question, lies directly after the verb put (Bill put [which box] in his pocket). How lexical items, syntax, and the overall context interact in locating such gaps is still under discussion.

(c) Slips of the tongue. Speech production involves at least two types of process. On the one hand, words have to be selected. On the other hand, they have to be integrated into the syntax. Both processes are exposed in slips of the tongue, cases in which the speaker accidentally makes an error. There are two main kinds of slips: selection and assemblage errors.

In selection errors, the speaker picks out a wrong item from a group of items similar in meaning or form. E.g. meaning: Please hand me the tin-opener (nut-crackers); He's going up town (down town); That's a horse of another color (of another race); form: Your seat's in the third component (compartment); Don't consider this an erection on my part (a rejection on my part). Selection errors usually involve lexical items, so they can tell us which words are closely associated in the mind. For example, people tend to say knives for 'forks', oranges for 'lemons', left for 'right', suggesting that words on the same general level of detail are tightly linked, especially if they are thought of as a pair. Similar-sounding words that get confused tend to have similar beginnings and endings and a similar rhythm, as in antidote for 'anecdote', confusion for 'conclusion'. Therefore, it is not just any word that is substituted, but one that is related in meaning or form. Furthermore, nouns are substituted for nouns, verbs for verbs, and prepositions for prepositions. So even if speakers have never had a class in English grammar, they must know unconsciously which grammatical classes these words belong to. The similarity of these words in sound or meaning suggests that we store words in our mental dictionary both in semantic classes (according to their related meanings) and by their sounds (similar to the spelling sequences in a printed dictionary), which testifies to the existence of paradigmatic patterns.

In assemblage errors, items are transposed or otherwise wrongly assembled. E.g. Dinner is being served at wine (Wine is being served at dinner); A poppy of my caper (A copy of my paper). Whereas selection errors tell us how individual words are stored and selected, assemblage errors indicate how whole sequences are organized ready for production, which testifies to the existence of entrenched syntactic (syntagmatic) patterns. In assemblage errors, mistakes nearly always take place within a single 'tone group', a short stretch of speech spoken with a single intonation contour. This suggests that the tone group is the unit of planning. Within the tone group, items with similar stress are often transposed, as in A gas of tank (a tank of gas). Furthermore, when sounds are switched, initial sounds change place with other initials, final sounds with other finals, and so on, as in Reap of hubbish (heap of rubbish); Hass or grash (hash or grass). All this suggests that speech is organized according to a rhythmic principle: a tone group is divided into smaller units (usually called feet), which in English are based on stress. Feet are divided into syllables, which are in turn possibly controlled by a biological 'beat' that regulates the speed of utterance.

Slips of the tongue are part of normal speech. Everybody makes them. At first sight, such slips may seem haphazard and confused. On closer inspection, they show certain regularities, compatible with the principles that underlie the system of language, and the conceptions of its representation in the mind.

(Aitchison, pp. 126-131).

5. COGNITIVE LINGUISTICS

Cognitive linguistics focuses on the nature of the relation between the Internalized Language (IL), the form in which language exists in the mind, and the Externalized Language (EL), represented by the physical, material forms of linguistic expressions. In the West, cognitive linguistics appeared as a conception opposed to Chomsky's generative grammar; in Eastern Europe, it logically emerged from onomasiology, which studies linguistic meaning in the direction "from the meaning to the form (or forms) that signify this meaning".