Having described the problem, we can now state the task more precisely.

The task is to implement a desktop (windowed) application that works with a single image and can convert it into colored text. The conversion applies a mapping rule that turns each input block into a symbol and a color, so two encoders have to be implemented.

An artificial neural network can serve as an encoder.

A multilayer perceptron with one hidden layer is a universal approximator, so this architecture is suitable for the task. The input image is cut into 10x10 blocks. The text encoder maps a real-valued array of 100 values to one of the symbols in the set of encodable symbols.
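A minimal sketch of cutting an image into 10x10 blocks and flattening each block into the 100-value input vector, assuming the image is already available as a grayscale array of doubles. The class name BlockSplitter, the row-major order and the decision to ignore incomplete edge blocks are assumptions, not the library's API:

```csharp
using System;
using System.Collections.Generic;

public static class BlockSplitter
{
    // Splits an image indexed [row, column] into non-overlapping blockSize x blockSize
    // blocks, scanning the blocks row by row. Each block is flattened row-major into a
    // double[blockSize * blockSize]. Pixels that do not fill a whole block at the right
    // or bottom edge are ignored.
    public static List<double[]> Split(double[,] image, int blockSize = 10)
    {
        int height = image.GetLength(0);
        int width = image.GetLength(1);
        var blocks = new List<double[]>();
        for (int top = 0; top + blockSize <= height; top += blockSize)
        {
            for (int left = 0; left + blockSize <= width; left += blockSize)
            {
                var block = new double[blockSize * blockSize];
                for (int r = 0; r < blockSize; r++)
                    for (int c = 0; c < blockSize; c++)
                        block[r * blockSize + c] = image[top + r, left + c];
                blocks.Add(block);
            }
        }
        return blocks;
    }
}
```

A 20x30 image therefore yields six blocks of 100 values each, in the order in which the corresponding symbols are written to the text.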

Color encoding is based on the statistical notion of the mode.

The mode is the value that occurs most frequently in a set of observations (the mode is the most typical value). A set may have more than one mode (for example, in 6, 2, 6, 6, 8, 9, 9, 9, 10 both 6 and 9 are modes). Applied to color, the 100 colors of a block are arranged into a frequency distribution and the most frequent value is chosen; if several colors share the highest frequency, one of them is picked at random. The difficulty is that without an alpha channel there are 16,777,216 (2^24) possible colors, so in general the probability that even two pixels of a block have exactly the same color is very small. However, all colors similar to, say, red can be mapped to a single color. Doing this for every color yields a small set of, for example, 16 colors, on which the most typical color can be chosen correctly. Color-reduction algorithms exist, but they do not guarantee that the resulting colors match the Windows console colors, and the output is restricted to exactly those colors. Therefore an encoder is needed that first maps each color to one of the 16 console colors and then picks the most typical color from the resulting set.
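The mode selection with random tie-breaking can be sketched as follows, assuming each pixel has already been reduced to a palette index from 0 to 15. ColorMode is a hypothetical name; in this work the reduction itself is done by a neural network:

```csharp
using System;
using System.Collections.Generic;

public static class ColorMode
{
    // Returns the mode of a block's color indices. If several indices share the
    // highest frequency, one of them is chosen at random.
    public static int Mode(IReadOnlyList<int> colorIndices, Random random)
    {
        if (colorIndices.Count == 0)
            throw new ArgumentException("The block contains no pixels.", nameof(colorIndices));

        // Frequency of every color index in the block.
        var counts = new Dictionary<int, int>();
        foreach (int index in colorIndices)
            counts[index] = counts.TryGetValue(index, out int seen) ? seen + 1 : 1;

        // Highest frequency.
        int best = 0;
        foreach (int frequency in counts.Values)
            best = Math.Max(best, frequency);

        // All indices with that frequency; pick one of them at random.
        var modes = new List<int>();
        foreach (KeyValuePair<int, int> pair in counts)
            if (pair.Value == best)
                modes.Add(pair.Key);
        return modes[random.Next(modes.Count)];
    }
}
```

For the example sequence 6, 2, 6, 6, 8, 9, 9, 9, 10 the method returns either 6 or 9, depending on the random generator.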

Since it has been proved that a multilayer perceptron with one hidden layer is a universal approximator, both encoders can be implemented with this network.

Next comes the problem of building the training set. The space of input patterns contains 2^100 possible patterns. We need a minimal set of symbols that can represent as many patterns as possible. The symbols are taken from the ASCII table. The difficulty is that many patterns cannot be mapped to any particular symbol, for example those in Figure 2.6.

Figure 2.6: Patterns that cannot be mapped unambiguously to a symbol.

Since such patterns are the majority, they have to be classified and handled somehow. Two sets can be distinguished: patterns in which the vast majority of pixels are black, and patterns in which the vast majority of pixels are white.
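To illustrate the two groups, here is a hypothetical rule-based check that measures the share of painted pixels in a block. In this work these groups appear to be learned by the symbol network as the '#' and ' ' classes, so the rule itself, its 0.9 share and the convention that 1 means a painted pixel are assumptions for illustration only:

```csharp
public static class DominantPixelRule
{
    // Hypothetical rule: 1 = painted (dark) pixel, 0 = background; a pixel counts as
    // painted if its value is at least 0.5. Returns fillSymbol if at least `share` of the
    // pixels are painted, emptySymbol if at least `share` are background, and null if the
    // block is neither and should be passed to the symbol network.
    public static char? Classify(double[] block, char fillSymbol = '#', char emptySymbol = ' ', double share = 0.9)
    {
        int painted = 0;
        foreach (double pixel in block)
            if (pixel >= 0.5)
                painted++;
        int background = block.Length - painted;

        if (painted >= share * block.Length) return fillSymbol;
        if (background >= share * block.Length) return emptySymbol;
        return null;
    }
}
```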

Because these patterns cannot be encoded unambiguously, we assign each group a user-defined symbol, for example '#' for the first group and ' ' for the second. There are also patterns that can be grouped by certain regularities. I identified the following groups:

Table 1: Set of typical patterns

| Description | Symbol |
|---|---|
| A horizontal rectangular band across the middle is filled | - (dash) |
| Most of the pattern is filled | Any user-defined symbol, for example # (hash) |
| A rectangular part on the left is filled | ( (left parenthesis) |
| A rectangular part on the right is filled | ) (right parenthesis) |
| A rectangular part at the bottom is filled | _ (underscore) |
| A rectangular area in the center is filled | 0 (zero) |
| A small part of the pattern is filled | Any user-defined symbol, for example ' ' (space) |
| The diagonal from the lower-left to the upper-right corner is filled | / (slash) |
| The diagonal from the upper-left to the lower-right corner is filled | \ (backslash) |
| A vertical band in the middle, dividing the pattern into two halves, is filled | \| (vertical bar) |

This set of symbols is enough to represent most patterns accurately. We make the following assumption: the quality of the final result is determined by how closely the output text as a whole resembles the input image, not by the quality of any individual pattern. Under this assumption, the chosen set of symbols is sufficient.

Hence the training set should consist of distorted typical patterns. In total, 770 patterns were collected (70 per symbol).
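The text does not say how the distortions were produced. A minimal sketch, assuming independent random pixel flips as the noise model and using the '-' pattern from Table 1 as the template; the template geometry, the flip probability and the class name DistortedPatterns are all hypothetical:

```csharp
using System;

public static class DistortedPatterns
{
    // Hypothetical template for '-': rows size/2 - 1 and size/2 of a size x size block
    // are painted (1), everything else is background (0). Stored row-major.
    public static double[] DashTemplate(int size = 10)
    {
        var pattern = new double[size * size];
        for (int row = size / 2 - 1; row <= size / 2; row++)
            for (int col = 0; col < size; col++)
                pattern[row * size + col] = 1;
        return pattern;
    }

    // Distorted copy: each pixel is flipped independently with probability flipProbability.
    public static double[] Distort(double[] template, double flipProbability, Random random)
    {
        var copy = (double[])template.Clone();
        for (int i = 0; i < copy.Length; i++)
            if (random.NextDouble() < flipProbability)
                copy[i] = 1 - copy[i];
        return copy;
    }

    // `count` distorted samples of one template, e.g. 70 per symbol.
    public static double[][] MakeSamples(double[] template, int count, double flipProbability, Random random)
    {
        var samples = new double[count][];
        for (int i = 0; i < count; i++)
            samples[i] = Distort(template, flipProbability, random);
        return samples;
    }
}
```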

Design

ANN design

The neural network is implemented in C#. The task can be stated as follows: implement a library for working with neural networks that allows multilayer networks to be assembled from parts, such as layers and neurons. The library must support training with the backpropagation algorithm and be flexible enough to be extended. It must provide a set of standard "objects" required by backpropagation and let the user define their own when the required ones are missing.

Since we use an object-oriented approach, the primary entities have to be identified from the task description: NEURAL NETWORK, MULTILAYER NETWORK, LAYER, NEURON, TRAINING, BACKPROPAGATION ALGORITHM, OBJECT.

Figure 3.1: Domain model diagram

An object is an entity that can be configured for a particular network; for example, different networks may use different generators to initialize their weights.

A number generator is an entity that produces pseudorandom numbers from a given range according to a given distribution. It makes the choice of weight initialization flexible.

Training is an entity that contains the minimal functionality needed to train a network.

The backpropagation (BP) algorithm is one example of a training algorithm.

A pattern is the format of the input and output data.

The remaining entities need no explanation.

From the domain model diagram we can build the relationship diagram.

Рисунок 3.2: Первая часть диаграммы отношений.

Интерфейс INeuroObject является базовым в иерархии. Присутствует для обеспечения возможности обобщения объектов и для возможности добавления новых общих для всех классов методов. Неявно унаследован от System.Object.

Интерфейс INeuralNetwork должен предоставлять методы расчета выходных значений по входным и запуска обучения.

Интерфейс ILayer служит для обобщения понятия “Слой”

Интерфейс ISingleLayer должен предоставлять метод расчета выходного значения по входного для конкретного слоя.

Интерфейс IMultiLayer должен предоставлять возможность получения объекта ISingleLayer по индексу

Все остальные классы на данной части диаграммы являются реализациями интерфейсов.

Рисунок 3.3: Вторая часть диаграммы отношений.

Интерфейс ILearn должен предоставлять метод запуска обучения. В отличие от INeuralNetwork обязует реализовать алгоритм, а не просто вызывать.

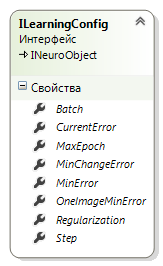

Интерфейс ILearningConfig должен предоставлять возможность получать данные, необходимые для обучения. Например, шаг обучения.

Интерфейс INeuroRandom должен давать возможность получать случайное число. Предполагается что пользователь реализует этот интерфейс, и добавляет такой генератор чисел, чтобы правильно инициализировать веса в соответствии с поставленной задачей. Например, может понадобится генератор, который генерирует числа из диапазона [-5,5] по нормальному закону распределения.
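A sketch of such a generator, assuming INeuroRandom has a single method Next() returning a double (the real signature is not given in the text). Normal values are produced with the Box-Muller transform, and values outside [min, max] are rejected, which yields a truncated normal distribution; the class name TruncatedNormalRandom is hypothetical:

```csharp
using System;

// Assumed shape of the interface; the text only says it returns a random number.
public interface INeuroRandom
{
    double Next();
}

// Normal distribution N(mean, sigma^2) restricted to [min, max] by rejection sampling.
// If [min, max] lies far out in the tails, Next() may loop for a long time.
public sealed class TruncatedNormalRandom : INeuroRandom
{
    private readonly Random _random;
    private readonly double _mean, _sigma, _min, _max;

    public TruncatedNormalRandom(double mean, double sigma, double min, double max, int seed)
    {
        if (sigma <= 0 || min >= max)
            throw new ArgumentException("Require sigma > 0 and min < max.");
        _random = new Random(seed);
        _mean = mean;
        _sigma = sigma;
        _min = min;
        _max = max;
    }

    public double Next()
    {
        while (true)
        {
            // Box-Muller transform: two uniform numbers give one standard normal number.
            double u1 = 1.0 - _random.NextDouble(); // in (0, 1], so Math.Log(u1) is finite
            double u2 = _random.NextDouble();
            double z = Math.Sqrt(-2.0 * Math.Log(u1)) * Math.Cos(2.0 * Math.PI * u2);
            double value = _mean + _sigma * z;
            if (value >= _min && value <= _max)
                return value; // otherwise reject and draw again
        }
    }
}
```

For the example in the text one could write `new TruncatedNormalRandom(0, 2, -5, 5, seed: 1)`; the standard deviation of 2 is an arbitrary illustrative choice.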

Все остальные классы в данной части диаграммы предназначены для реализации интерфейсов.

Рисунок 3.4: Третья часть диаграммы отношений.

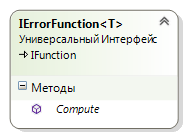

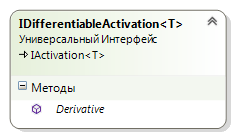

Интерфейс IFunction служит для обобщения понятия “функция”, а его производные интерфейсы, такие как IErrorFunction и IActivation предназначены для предоставления возможности расчета функции ошибки и активации соответственно.

IDifferentiableActivation служит для понятия дифференцируемой функции (важно,например, для алгоритма обратного распространения ошибки). Предоставляет метод для расчета производной.

IPartialErrorFunction служит для понятия функции ошибки, по текущему нейрону. Например, применим для вызова расчета ошибки на конкретном нейроне выходного слоя.

IFullErrorFunction не содержит реализаций. Присутствует если, возможно, пользователю понадобиться при вычислении ошибки текущего нейрона использовать данные других нейронов текущего слоя.
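A minimal sketch of how the activation part of this hierarchy could look. The member names Compute and Derivative are assumptions; the appendix calls ActivationFunction.Derivative with a neuron's weighted sum (LastPulse), so the derivative here also takes the weighted sum as its argument. Sigmoid and Relu below are illustrative classes, not the library's Neuro.MLP.ActivateFunction types:

```csharp
using System;

// Hypothetical shapes of the interfaces described above.
public interface IFunction { }

public interface IActivation : IFunction
{
    double Compute(double pulse);       // pulse = weighted sum of the neuron's inputs
}

public interface IDifferentiableActivation : IActivation
{
    double Derivative(double pulse);    // f'(pulse), needed by backpropagation
}

public sealed class Sigmoid : IDifferentiableActivation
{
    public double Compute(double pulse) => 1.0 / (1.0 + Math.Exp(-pulse));

    // f'(S) = f(S) * (1 - f(S))
    public double Derivative(double pulse)
    {
        double y = Compute(pulse);
        return y * (1.0 - y);
    }
}

public sealed class Relu : IDifferentiableActivation
{
    public double Compute(double pulse) => Math.Max(0.0, pulse);

    // The derivative at 0 is taken as 0 by convention.
    public double Derivative(double pulse) => pulse > 0 ? 1.0 : 0.0;
}
```

Because the training code depends only on IDifferentiableActivation, a new activation can be added by implementing these two members.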

All other classes in this part of the diagram implement these interfaces.

Many classes and interfaces in the hierarchies above are generic, so that the user can store data (for example, weights or inputs) in any type. Since my goal was to implement training by backpropagation, all implementations use System.Double as the type argument.

Class diagrams:

This coursework uses two neural networks: one for recognizing symbols and one for recognizing colors.

The first network takes 100 values in the range [0, 1] as input and has 10 possible outputs; the output on which the largest signal accumulates is selected. The architecture is a multilayer perceptron with one hidden layer of 7 neurons. The hidden layer uses the sigmoid activation function and the output layer uses ReLU. The error is measured by the mean squared Euclidean distance. To speed up training, one of the algorithms for initializing the trainable parameters was used, which made the network train faster: it took 317 iterations and reached an error of 0.3265. On the test set, which made up 15% of all patterns, 93.5% of patterns were recognized correctly. A total of 770 patterns were used.
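The forward pass and the choice of the winning output can be sketched as below. The weighted sum is S = Σ w·x − T, where T is the neuron's threshold; this sign convention is inferred from the update rule in Appendix A (weights are decreased while the Offset is increased) and is an assumption. The class ForwardPass is hypothetical, and layer sizes are taken from the arrays, so the same code covers the 100-7-10 network:

```csharp
using System;

public static class ForwardPass
{
    // One fully connected layer: S_j = sum_i w[j, i] * x_i - T_j and y_j = f(S_j).
    // weights is [neurons, inputs]; thresholds has one entry per neuron.
    public static double[] Layer(double[] input, double[,] weights, double[] thresholds, Func<double, double> f)
    {
        int neurons = weights.GetLength(0);
        if (weights.GetLength(1) != input.Length || thresholds.Length != neurons)
            throw new ArgumentException("Layer dimensions do not match.");
        var output = new double[neurons];
        for (int j = 0; j < neurons; j++)
        {
            double pulse = -thresholds[j];
            for (int i = 0; i < input.Length; i++)
                pulse += weights[j, i] * input[i];
            output[j] = f(pulse);
        }
        return output;
    }

    // Index of the largest output; the first one wins on ties.
    public static int ArgMax(double[] values)
    {
        int best = 0;
        for (int i = 1; i < values.Length; i++)
            if (values[i] > values[best])
                best = i;
        return best;
    }

    // Sigmoid hidden layer, ReLU output layer, winner-takes-all decision.
    public static int Classify(double[] input, double[,] w1, double[] t1, double[,] w2, double[] t2)
    {
        double[] hidden = Layer(input, w1, t1, s => 1.0 / (1.0 + Math.Exp(-s)));
        double[] output = Layer(hidden, w2, t2, s => Math.Max(0.0, s));
        return ArgMax(output);
    }
}
```

The returned index is then translated into a symbol; ties resolve to the first maximum, as with IndexOf(Max()) in Appendix A.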

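An example of assembling this network with the implemented library:

```csharp
using System; // Random; the namespaces of the library classes are not shown in the original listing

// learningSet and testingSet: the training and test sets (IList<NeuroImage<double>>) prepared beforehand.
// One generator is shared by both layers: on .NET Framework, Random instances created in quick
// succession can receive the same time-based seed.
var random = new Random();
ISingleLayer<double>[] layers = new ISingleLayer<double>[2];
layers[0] = new SingleLayer(100, 7, new Neuro.MLP.ActivateFunction.Sigmoid(), random); // hidden layer: 100 inputs, 7 neurons
layers[1] = new SingleLayer(7, 10, new Neuro.MLP.ActivateFunction.Relu(), random);    // output layer: 7 inputs, 10 neurons
MultiLayer mLayer = new MultiLayer(layers);
DifferintiableLearningConfig config = new DifferintiableLearningConfig(new Neuro.MLP.ErrorFunction.HalfEuclid());
config.Step = 0.1;                  // learning rate
config.OneImageMinError = 0.01;     // a pattern whose error is already below this value is skipped
config.MinError = 0.4;              // stop when the training error falls below this value
config.MinChangeError = 0.0000001;  // stop when the error changes less than this between epochs
config.UseRandomShuffle = true;     // shuffle the patterns every epoch
config.MaxEpoch = 10000;            // upper limit on the number of epochs
SimpleBackPropogation learn = new SimpleBackPropogation(config);
MultiLayerNeuralNetwork network = new MultiLayerNeuralNetwork(mLayer, learn);
network.DefaultInitialise();
network.Train(learningSet, testingSet);
network.Save("fsdf" + ".json");
```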

The second network uses a similar architecture. One difference is that it must output one of 16 possible colors, so the output layer has 16 neurons. The hidden layer has 6 neurons. The input layer has 24 neurons: a color is stored as RGB without an alpha channel, and encoding such a color takes 24 bits. The network receives the bit representation of the color, since the perceived color is determined by that representation. The network trained in 561 iterations, reaching an error of 0.4543. On the test set, which made up 15% of all patterns, 97% of patterns were recognized correctly. A total of 600 patterns were used.
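A minimal sketch of turning a 24-bit RGB color into the 24 network inputs. The bit order (most significant bit of red first) and the class name ColorBits are assumptions; the text only states that the bit representation is used:

```csharp
using System;

public static class ColorBits
{
    // Encodes a 24-bit color 0xRRGGBB as 24 inputs, each 0 or 1.
    // inputs[0] is the most significant bit of red, inputs[23] the least significant bit of blue.
    public static double[] ToInputs(int rgb)
    {
        if ((rgb & ~0xFFFFFF) != 0)
            throw new ArgumentOutOfRangeException(nameof(rgb), "Only 24-bit RGB values are supported.");
        var inputs = new double[24];
        for (int bit = 0; bit < 24; bit++)
            inputs[bit] = (rgb >> (23 - bit)) & 1;
        return inputs;
    }
}
```

For example, pure red 0xFF0000 gives eight ones followed by sixteen zeros.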

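An example of assembling this network with the implemented library:

```csharp
using System; // Random; the namespaces of the library classes are not shown in the original listing

// learningSet and testingSet: the training and test sets (IList<NeuroImage<double>>) prepared beforehand.
// One generator is shared by both layers: on .NET Framework, Random instances created in quick
// succession can receive the same time-based seed.
var random = new Random();
ISingleLayer<double>[] layers = new ISingleLayer<double>[2];
layers[0] = new SingleLayer(24, 6, new Neuro.MLP.ActivateFunction.Sigmoid(), random); // hidden layer: 24 input bits, 6 neurons
layers[1] = new SingleLayer(6, 16, new Neuro.MLP.ActivateFunction.Relu(), random);    // output layer: 6 inputs, 16 colors
MultiLayer mLayer = new MultiLayer(layers);
DifferintiableLearningConfig config = new DifferintiableLearningConfig(new Neuro.MLP.ErrorFunction.HalfEuclid());
config.Step = 0.1;                  // learning rate
config.OneImageMinError = 0.01;     // a pattern whose error is already below this value is skipped
config.MinError = 0.5;              // stop when the training error falls below this value
config.MinChangeError = 0.0000001;  // stop when the error changes less than this between epochs
config.UseRandomShuffle = true;     // shuffle the patterns every epoch
config.MaxEpoch = 10000;            // upper limit on the number of epochs
SimpleBackPropogation learn = new SimpleBackPropogation(config);
MultiLayerNeuralNetwork network = new MultiLayerNeuralNetwork(mLayer, learn);
network.DefaultInitialise();
network.Train(learningSet, testingSet);
network.Save("fsdf" + ".json");
```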

An example of symbol recognition (the network output the symbol '('):

Figure 3.5: Example pattern

Application design

From the application description given earlier, the following application-level entities can be identified: IMAGE, TEXT, ENCODER, EFFECT.

To find additional entities, we try to derive them from the existing ones.

1) IMAGE is an object of the System.Drawing.Bitmap class. An image is either a REFERENCE image or a MODIFIABLE image.

2) TEXT is the result of converting an image. In general, the text is COLORED.

3) ENCODER is an algorithm or rule that converts information from one form to another. A SYMBOL ENCODER and a COLOR ENCODER are needed. Encoding requires an IMAGE FRAGMENT. The combined result of the two encoders is a COLORED LETTER. A NEURAL NETWORK is used as the encoder.

4) EFFECT is a rule for transforming an image that changes its content, such as pixel colors. For example, the color removal effect.

5) The REFERENCE IMAGE exists so that the original version of the image can be restored, undoing all applied effects.

6) The MODIFIABLE IMAGE is the image the user works with and that is displayed on the form.

7) COLORED TEXT is a set of (SYMBOL, COLOR) pairs.

8) IMAGE FRAGMENT is an n x m area of pixels, for example 10 x 10, that forms one portion of data for an encoder.

9) COLORED LETTER is a single (symbol, color) pair.

10) SYMBOL ENCODER is the rule that maps an image fragment to a symbol.

11) COLOR ENCODER is the rule that determines the most typical color of an image fragment.

12) NEURAL NETWORK is the model on which the encoders are based.

13) SYMBOL is an element of the set {' ', '#', '(', ')', '\', '/', '|', '_', '-', '0', '\n'}, where ' ' and '#' are configurable parameters.

14) COLOR is an element of the set {Black, Blue, Green, Aqua, Red, Purple, Yellow, White, Gray, Light Blue, Light Green, Light Aqua, Light Red, Light Purple, Light Yellow, Bright White}.

Use case diagram:

Application flows:

Use case 1. Precondition: the program is started.

The main window is created and displayed. Use case 2 is unlocked. The data is initialized.

Use case 2. Precondition: the Open button in the main window is clicked, or an image is dragged into the client area of the window.

The Open window is created and displayed. The user enters the path to the file. The system opens the file; if the file cannot be opened, go to 2.1. The system loads the file into the reference and modifiable images; if the reference and modifiable images already exist, go to 2.2. Use cases 3 to 7 are unlocked. The Open window is destroyed.

2.1. Terminate use case 2 and display an error message.

2.2. Ask the user whether to replace the image. If the user agrees, continue use case 2; otherwise, terminate it.

Use case 3. Precondition: the user clicks one of the buttons on the Effects tab. The modifiable image is changed according to the selected algorithm.

Use case 4. Precondition: the user clicks Save or Save As. The Save window is created and displayed. The user chooses a path, or the default path is used. The modifiable image is saved to a file. The Save window is destroyed.

Use case 5. Precondition: the user clicks GO.

The image conversion algorithm runs. The output form is created and displayed, and the result of the algorithm is shown in it.

Use case 6. Precondition: the user selects the Restore command.

The modifiable image is reset to the state of the reference image.

Use case 7. Precondition: the user clicks the window's close button or the Exit button.

The resources allocated by the application while it was running are released.

From the use case descriptions we refine the set of classes:

MAIN WINDOW, OPEN WINDOW, SAVE WINDOW, OUTPUT WINDOW.

Список форм и их описание.Прототипирование:

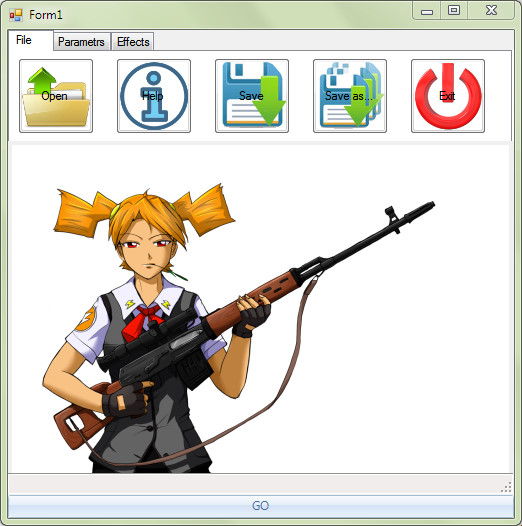

Главное окно – окно, загружаемое во время работы прецедента 1, посредством которого пользователь запускает прецеденты.(рис 3.6)

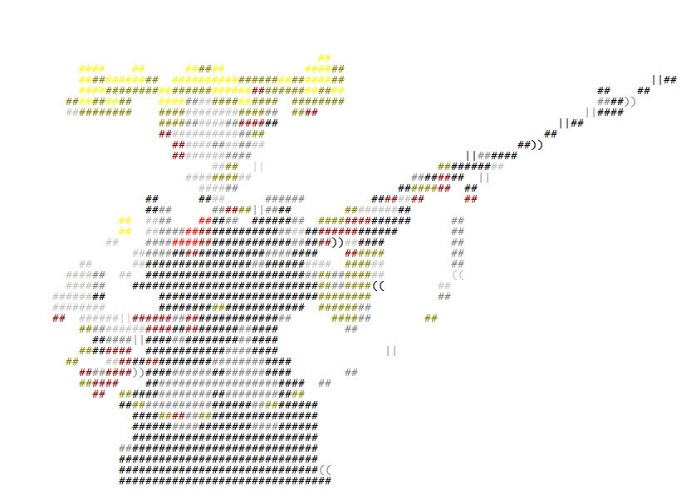

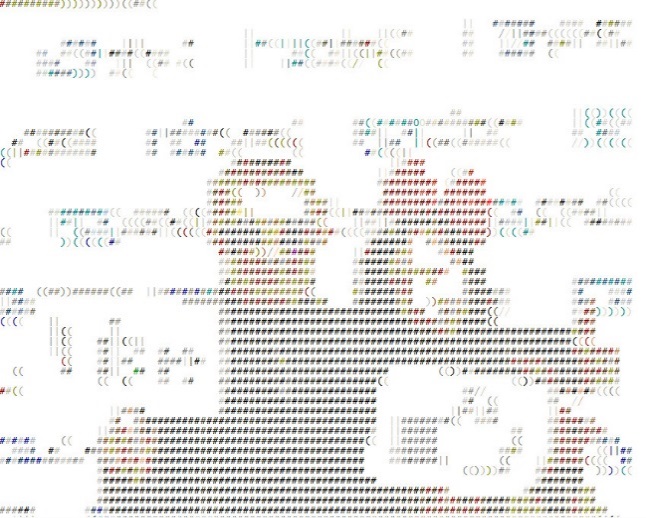

Окно Вывода – окно, загружаемое во время работы прецедента 5. Используется для вывода результата прецедента. Обеспечивает возможность копирования результата.(рис 3.7)

Окно Сохранения – окно, загружаемое во время работы прецедента 4. Используется для упрощения пользователю задачи выбора пути, по которому необходимо сохранить файл. В качестве окна используется типовое

Окно Открытия – окно, загружаемое во время работы прецедента 2. Используется для упрощения пользователю задачи выбора пути, по которому храниться файл. В качестве окна используется типовое

Рисунок 3.6: Главное окно

Рисунок 3.7: Окно вывода

Application state diagram:

C1 Application start

C2 Save button clicked

C3 Open button clicked

C4 Exit button or the window's close button clicked

C5 Use case 5 finished

C6 Image dragged onto the form

C7 Any function button on the Effects tab clicked

D1 Use case 1 is executed

D2 Use case 4 is executed

D3 Use case 2 is executed

D4 Use case 7 is executed

D5 The output form is created and displayed; the result of use case 5 is shown in its client area.

D6 Use case 2.2 is executed if the reference image is already loaded; otherwise use case 2 is executed

D7 The selected effect is applied (use case 3, or use case 6 if Restore was chosen)

Refined use case diagram:

Initial domain model diagram:

The domain objects should now be simplified by deciding which entities become classes that have to be implemented, which map to classes that already exist, and which become objects.

The EFFECT entity can be represented by a static method; all effects are then encapsulated in the static class BitmapWorker.

The IMAGE entity can be represented by the existing System.Drawing.Bitmap class.

Then the reference image, the image fragment and the modifiable image are all objects of the image class. The reference image must be hidden and expose only copies of itself, so this object needs to be encapsulated in a separate class. The modifiable image does not need to be stored explicitly: it can be taken from the Image property of a System.Windows.Forms.PictureBox object placed on the form. The view is then refreshed automatically whenever a new modifiable image is assigned to that property.

The ENCODER object is essentially an intermediate link, so it can be replaced by the neural network: the symbol encoder and the color encoder are then objects of the neural network class. The color encoder must extend the standard Compute method of the MultiLayerNeuralNetwork class, because after getting the network's outputs it still has to accumulate and process them.

Since we work with only 16 colors, instead of a Color class we can use a dictionary that maps a color's index to its RGB value, or an algorithm that reduces an arbitrary color to this set. Likewise, instead of a Symbol class we can use a dictionary that maps a symbol's index to a char.
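A sketch of the index-to-RGB mapping (an array indexed by the color number plays the role of the dictionary), together with a simple nearest-color reduction that could serve as a non-neural baseline for the color encoder. The RGB values are those of the classic (legacy) Windows console palette, listed in the same order as the COLOR set above; both the values and the nearest-neighbor rule are assumptions, since the work itself uses a neural network for this step:

```csharp
using System.Collections.Generic;

public static class ConsolePalette
{
    // Index -> 0xRRGGBB in the order of the COLOR set: Black, Blue, Green, Aqua, Red, Purple,
    // Yellow, White, Gray, Light Blue, Light Green, Light Aqua, Light Red, Light Purple,
    // Light Yellow, Bright White (classic console palette values, assumed).
    public static readonly IReadOnlyList<int> Rgb = new[]
    {
        0x000000, 0x000080, 0x008000, 0x008080, 0x800000, 0x800080, 0x808000, 0xC0C0C0,
        0x808080, 0x0000FF, 0x00FF00, 0x00FFFF, 0xFF0000, 0xFF00FF, 0xFFFF00, 0xFFFFFF,
    };

    // Reduces an arbitrary 0xRRGGBB color to the index of the nearest palette color
    // (squared Euclidean distance in RGB space; the lowest index wins on ties).
    public static int Nearest(int rgb)
    {
        int bestIndex = 0;
        int bestDistance = int.MaxValue;
        for (int i = 0; i < Rgb.Count; i++)
        {
            int dr = ((rgb >> 16) & 0xFF) - ((Rgb[i] >> 16) & 0xFF);
            int dg = ((rgb >> 8) & 0xFF) - ((Rgb[i] >> 8) & 0xFF);
            int db = (rgb & 0xFF) - (Rgb[i] & 0xFF);
            int distance = dr * dr + dg * dg + db * db;
            if (distance < bestDistance)
            {
                bestDistance = distance;
                bestIndex = i;
            }
        }
        return bestIndex;
    }
}
```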

Colored text can be stored as two arrays: an array of colors and an array of symbols. The service character '\n' can be associated with any color, for example black, or stored without a color; in the latter case, printing '\n' has to be handled separately. The data that exists as separate objects can be encapsulated in a Model class.
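A minimal sketch of colored text stored as two parallel arrays, assuming color indices follow the order of the COLOR set. That order coincides with the numeric values of .NET's System.ConsoleColor enumeration (Black = 0 through White = 15), so console output reduces to a cast. The class ColoredText is hypothetical; the duplicate parameter mirrors the Doublicate flag of the Model class:

```csharp
using System;
using System.Text;

public sealed class ColoredText
{
    public char[] Symbols { get; }
    public int[] Colors { get; } // palette indices 0..15; the entry stored for '\n' is ignored

    public ColoredText(char[] symbols, int[] colors)
    {
        if (symbols.Length != colors.Length)
            throw new ArgumentException("Symbols and colors must have the same length.");
        Symbols = symbols;
        Colors = colors;
    }

    // Plain text (e.g. for copying from the output window); with duplicate = true every
    // symbol except '\n' is written twice.
    public string ToPlainText(bool duplicate)
    {
        var builder = new StringBuilder();
        foreach (char symbol in Symbols)
        {
            builder.Append(symbol);
            if (duplicate && symbol != '\n')
                builder.Append(symbol);
        }
        return builder.ToString();
    }

    // Console output: palette index i is cast to ConsoleColor i; '\n' is handled separately.
    public void WriteToConsole(bool duplicate)
    {
        for (int i = 0; i < Symbols.Length; i++)
        {
            if (Symbols[i] == '\n')
            {
                Console.WriteLine();
                continue;
            }
            Console.ForegroundColor = (ConsoleColor)Colors[i];
            Console.Write(new string(Symbols[i], duplicate ? 2 : 1));
        }
        Console.ResetColor();
    }
}
```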

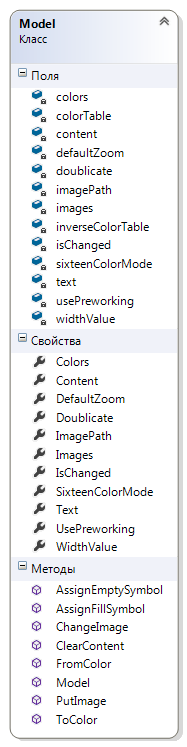

Class diagram:

ColorTo changes the color palette of the selected image to a given one, for example by replacing 24-bit color with 8-bit color.

ColorToGray changes the palette to black and white (one bit). It is a special case of ColorTo and the one used most often in the application.
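A sketch of a ColorToGray-style conversion on raw 0xRRGGBB pixels, producing the one-bit image that the symbol encoder consumes. The luma weights are the ITU-R BT.601 coefficients; the threshold of 128, the convention 1 = dark (painted) and the class name Binarizer are assumptions, since the text does not describe the algorithm:

```csharp
public static class Binarizer
{
    // Y = 0.299 R + 0.587 G + 0.114 B; pixels darker than the threshold become 1 (painted),
    // all others 0 (background). The input is indexed [row, column].
    public static double[,] ToBlackAndWhite(int[,] rgb, double threshold = 128)
    {
        int height = rgb.GetLength(0);
        int width = rgb.GetLength(1);
        var result = new double[height, width];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                int p = rgb[y, x];
                double luma = 0.299 * ((p >> 16) & 0xFF) + 0.587 * ((p >> 8) & 0xFF) + 0.114 * (p & 0xFF);
                result[y, x] = luma < threshold ? 1.0 : 0.0;
            }
        }
        return result;
    }
}
```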

Cut returns an image fragment of a given size.

RecalculateSize computes the size that would result from scaling a given size, that is, it computes one side from the other while preserving the aspect ratio.

Resize changes the size of an image.

Round adjusts a size so that rectangles of a given size fit into it and cover its whole area.

Zoom scales an image while preserving its proportions.
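The size calculations behind RecalculateSize, Round and Zoom can be sketched as plain arithmetic. The signatures and the helper ZoomSize are hypothetical, and rounding down to a multiple of the block size (cropping rather than padding) is an assumption:

```csharp
using System;

public static class SizeMath
{
    // Aspect-preserving size for a given target width: newHeight = height * targetWidth / width.
    public static (int Width, int Height) RecalculateSize(int width, int height, int targetWidth)
    {
        if (width <= 0 || height <= 0 || targetWidth <= 0)
            throw new ArgumentOutOfRangeException(nameof(targetWidth), "Sizes must be positive.");
        int newHeight = (int)Math.Round((double)height * targetWidth / width);
        return (targetWidth, Math.Max(1, newHeight));
    }

    // Rounds a length down to a multiple of blockSize (but to at least one block),
    // so that blockSize x blockSize fragments tile the image exactly.
    public static int Round(int length, int blockSize = 10)
        => Math.Max(blockSize, length / blockSize * blockSize);

    // Target size for zooming: scale to the target width, then fit to whole blocks.
    public static (int Width, int Height) ZoomSize(int width, int height, int targetWidth, int blockSize = 10)
    {
        var scaled = RecalculateSize(width, height, targetWidth);
        return (Round(scaled.Width, blockSize), Round(scaled.Height, blockSize));
    }
}
```

With the test configuration used later (width 600), a 1000x345 image would be scaled to 600x207 and then cut to 600x200, i.e. 60x20 fragments.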

Colors returns the array of colors computed by the color encoder.

DefaultZoom is a flag that determines whether the image is resized before encoding. Some images are very large, and the resulting text would not wrap correctly (for example, my monitor fits lines up to 1600 pixels wide; a longer line of text wraps onto the next line, which spoils the result).

Doublicate is a flag that determines whether each symbol is output twice. It was added because a character cell is generally not square, and some images look too narrow if their symbols are not duplicated.

Figure 3.7: Comparison of the result with and without duplicated symbols.

ImagePath stores the path to the image so that saving to the default location works.

Images provides a dictionary <pattern index, symbol>.

IsChanged records whether the image has been modified. Before the application closes, it is used to ask the user whether to save the image if it has been changed. Applying an effect and undoing changes both count as modifications.

SixteenColorMode sets the color-encoding mode. When the flag is on, colors are encoded by the neural network. When it is off, the color is a random pixel color from the fragment; this may reduce the quality of the result and its compatibility with the Windows console, but improves speed.

Figure 3.8: Result with and without color encoding

Text returns the array of symbols computed by the symbol encoder.

UsePreworking enables preprocessing. Preprocessing removes color from an image fragment before it is passed to the symbol encoder and does not noticeably affect performance. It filters noise in the patterns and improves encoding quality, but in some cases it causes part of the information to be lost.

Figure 3.9: Result with and without preprocessing.

WidthValue is the width used when resizing the image.

The methods and properties for working with the reference image form a separate category. Their interface must be minimal and sufficient, and must not break encapsulation.

Content returns a copy of the reference image, which guarantees that the reference cannot be modified.

ChangeImage replaces the current reference image. If there is no current image, it does nothing. If the replacement succeeds, the old image is disposed.

ClearContent clears the image, if one is present.

PutImage initializes the image if it is empty; otherwise it does nothing. Together these methods let us delete, set or replace the image, each in a single operation.
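A minimal sketch of this interface, written generically so that it compiles without System.Drawing: T stands for the image type (Bitmap in the application), and the copy function stands for Bitmap cloning. The class name ReferenceImage and the choice to dispose the image in ClearContent are assumptions:

```csharp
using System;

public sealed class ReferenceImage<T> where T : class, IDisposable
{
    private readonly Func<T, T> _copy;
    private T _image;

    public ReferenceImage(Func<T, T> copy)
    {
        _copy = copy ?? throw new ArgumentNullException(nameof(copy));
    }

    // A copy of the reference image (or null), so callers cannot modify the reference itself.
    public T Content => _image == null ? null : _copy(_image);

    // Sets the image only if none is stored yet.
    public void PutImage(T image)
    {
        if (_image == null)
            _image = image;
    }

    // Replaces the stored image and disposes the old one; does nothing if none is stored.
    public void ChangeImage(T image)
    {
        if (_image == null)
            return;
        T old = _image;
        _image = image;
        old.Dispose();
    }

    // Disposes and forgets the stored image, if any.
    public void ClearContent()
    {
        _image?.Dispose();
        _image = null;
    }
}
```

In the application this would be instantiated as ReferenceImage<Bitmap> with a copy function such as `b => (Bitmap)b.Clone()`.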

The AssignEmptySimbol and AssignFillSymbol methods set the configurable symbols used for encoding. Recall that these symbols carry no particular meaning.
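A sketch of the symbol dictionary with the two configurable entries. The method names mirror those in the text, but their signatures, the class name SymbolTable and the order of the network outputs are assumptions:

```csharp
public sealed class SymbolTable
{
    // Output index -> symbol. Index 0 holds the empty symbol and index 1 the fill symbol;
    // the order of the remaining outputs is assumed.
    private readonly char[] _symbols = { ' ', '#', '(', ')', '\\', '/', '|', '_', '-', '0' };

    private const int EmptyIndex = 0;
    private const int FillIndex = 1;

    public char this[int outputIndex] => _symbols[outputIndex];

    public int Count => _symbols.Length;

    public void AssignEmptySimbol(char symbol) => _symbols[EmptyIndex] = symbol;

    public void AssignFillSymbol(char symbol) => _symbols[FillIndex] = symbol;
}
```

Replacing '#' with '█' and ' ' with '.', as in Figure 3.10, changes only these two entries; the trained network is unaffected.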

FromColor and ToColor convert a color's index to its RGB representation and back.

Figure 3.10: Result of replacing '#' with '█' and ' ' with '.'

Note also that such a replacement could serve as the basis of a primitive lossy image-reconstruction algorithm.

Flowchart of the encoding algorithm:

Testing

For testing, the application is configured as follows:

Use color encoding: Yes

Scale the image: Yes, to a width of 600

Duplicate symbols: Yes

Use preprocessing: Yes

Fill symbol: '#'

Empty symbol: ' '

We start with the simplest case, a color image on a white background:

| Image | Result |
|---|---|

The system handles this type of image correctly. Almost no information is lost.

Next, we feed an image whose background differs strongly from the target object:

| Image | Result |
|---|---|

The part of the image belonging to the background is lost, as are fragments that lie close to the background. This is mainly due to the shortcomings of the color-removal algorithm. The object in focus can still be recognized unambiguously.

Next, we feed images of nature. In these examples the background blends with the object, which makes recognition as hard as possible.

| Image | Result |
|---|---|

The system encodes such images poorly. First, removing color loses a lot of information; second, the scene contains many objects, so a single fragment is likely to contain parts of different, possibly unrelated objects. In such a case, it is mostly the color that tells us what the image shows.

Finally, here are a few more interesting special cases.

Figure 4.1: Black-and-white input image

Figure 4.2: Input image is a photograph

Figure 4.3: Dark image on a dark background

Figure 4.4: Light image on a light background.

Figure 4.5: Images containing a large amount of information.

Conclusions

As a result of this coursework, a library for building neural networks was designed and implemented. Particular attention was paid to the architecture, so that typical neural networks can be assembled and, when needed, new functionality can be defined and integrated with the existing code. This came at the cost of speed: because dynamic polymorphism and inheritance are used throughout, the code is slower and more bloated than non-extensible alternatives.

A system for encoding images as ASCII art was also designed and implemented. As shown above, the system works well on images for which the color-removal algorithm works well. If most of the information is lost after color removal, the encoding is poor as well. Encoding also performs worse when the image contains many objects. In the remaining cases the result is acceptable: the encoded text visibly resembles the input image.

Using an ANN in this work reduced the drawbacks of the image-to-ASCII-art methods described at the beginning of the work. Thanks to the ANN, the result can be stored in text formats that do not support color, transmitted over a network in compact form, printed to the console, and used as a replacement for an artist in the corresponding art form.

Admittedly, manual work is of higher quality than the system's output. However, manual work requires a lot of effort and time, whereas the system encodes very quickly. Combining the program with human work can speed up an artist's work.

The possibility of reconstructing an image, albeit with losses, was demonstrated, which suggests that a lossy compression algorithm could be built on the described technique.


Appendix A

[DataContract]

public class SimpleBackPropogation: DifferintiableLearningConfig, ILearn<double>

{

public SimpleBackPropogation(DifferintiableLearningConfig config)

: base(config)

{

}

/// <summary>

/// Trains the network to map input signals to output signals

/// </summary>

/// <param name="network">network to train</param>

/// <param name="data">training set</param>

/// <param name="check">test set used to measure recognition accuracy</param>

public void Train(INeuralNetwork<double> network, IList<NeuroImage<double>> data, IList<NeuroImage<double>> check)

{

// get a convenient typed reference to the network's layers

MultiLayer net = network.Layer as MultiLayer;

this.CurrentEpoch = 0;

this.Succes = false;

double lastError = 0;

double[][] y = new double[data.Count][];

do

{

lastError = CurrentError;

// shuffle the training-set patterns for this epoch

if (UseRandomShuffle)

{

RandomShuffle(ref data);

}

for (int curr = 0; curr < data.Count; curr++)

{

// compute the current outputs and pulses (weighted sums) of every neuron

y[curr] = network.Compute(data[curr].Input);

// evaluate the current pattern; if its error is already acceptable, move on

// to the next pattern. This modification speeds up training and

// reduces the mean squared error

if (Compare(data[curr].Output, net.Layer[net.Layer.Length - 1].LastPulse))

{

continue;

}

// compute the output layer error

OutputLayerError(data, net, curr);

// compute the hidden layer errors

HiddenlayerError(net);

// update the weights and thresholds

ModifyParametrs(data, net, curr);

}

// compute the error on the training set

ComputeError(data, network, y);

// add the regularization term to the error

ComputeRegularizationError(data, net);

ComputeRegularizationError(data, net);

System.Console.WriteLine("Epoch #" + CurrentEpoch.ToString() +

" finished; current error is " + CurrentError.ToString()

);

CurrentEpoch++;

}

while (CurrentEpoch < MaxEpoch && CurrentError > MinError &&

Math.Abs(CurrentError - lastError) > MinChangeError);

ComputeRecognizeError(check, network);

}

private void ComputeRecognizeError(IList<NeuroImage<double>> check, INeuralNetwork<double> network)

{

int accept = 0;

double LastError = 0;

RecogniseError = 0;

for (int i = 0; i < check.Count; i++)

{

double[] realOutput = network.Compute(check[i].Input);

double[] result = new double[realOutput.Length];

LastError = ErrorFunction.Compute(check[i].Output, realOutput);

RecogniseError += LastError;

double max = realOutput.Max();

int index = realOutput.ToList().IndexOf(max);

result[index] = 1;

if (ArrayCompare(result, check[i].Output) == true)

{

accept++;

}

}

RecognisePercent = (double)accept / (double)check.Count;

RecogniseError /= 2;

}

public static bool ArrayCompare(double[] a, double[] b)

{

if (a.Length == b.Length)

{

for (int i = 0; i < a.Length; i++)

{

if (a[i] != b[i]) { return false; }

}

return true;

}

return false;

}

/// <summary>

/// Adds the regularization term to the mean squared error

/// </summary>

/// <param name="data">training set</param>

/// <param name="net">network</param>

private void ComputeRegularizationError(IList<NeuroImage<double>> data, MultiLayer net)

{

if (Math.Abs(Regularization - 0d) > Double.Epsilon)

{

double reg = 0;

for (int layerIndex = 0; layerIndex < net.Layer.Length; layerIndex++)

{

for (int neuronIndex = 0; neuronIndex < net.Layer[layerIndex].Neuron.Length; neuronIndex++)

{

for (int weightIndex = 0; weightIndex < net.Layer[layerIndex].Neuron[neuronIndex].Weights.Length; weightIndex++)

{

reg += net.Layer[layerIndex].Neuron[neuronIndex].Weights[weightIndex] *

net.Layer[layerIndex].Neuron[neuronIndex].Weights[weightIndex];

}

}

}

CurrentError += Regularization * reg / (2 * data.Count);

}

}

/// <summary>

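/// Computes the network error on the training set

/// </summary>

/// <param name="data">training set</param>

/// <param name="network">network</param>

/// <param name="realOutput">network outputs for each pattern of the training set</param>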

private void ComputeError(IList<NeuroImage<double>> data, INeuralNetwork<double> network, double[][] realOutput)

{

int accept = 0;

double LastError = 0;

CurrentError = 0;

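for (int i = 0; i < data.Count; i++)

{

LastError = ErrorFunction.Compute(data[i].Output, realOutput[i]);

CurrentError += LastError;

// count the pattern as learned if the winning output matches the expected one (as in ComputeRecognizeError)

double[] result = new double[realOutput[i].Length];

result[Array.IndexOf(realOutput[i], realOutput[i].Max())] = 1;

if (ArrayCompare(result, data[i].Output))

{

accept++;

}

}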

TeachPercent = (double)accept / (double)data.Count;

CurrentError /= 2;

}

    /// <summary>
    /// Updates the trainable parameters of the network
    /// </summary>
    /// <param name="data">training set</param>
    /// <param name="net">network</param>
    /// <param name="curr">index of the current image</param>
    private void ModifyParametrs(IList<NeuroImage<double>> data, MultiLayer net, int curr)
    {
        for (int i = net.Layer.Length - 1; i >= 0; i--) // over all layers, in reverse order
        {
            for (int j = 0; j < net[i].Neuron.Length; j++) // for each neuron
            {
                // Step times the neuron's error times the derivative of its activation function.
                double temp = Step * net[i].Neuron[j].CurrentError
                    * net[i].Neuron[j].ActivationFunction.Derivative(net[i].Neuron[j].LastPulse);
                net[i].Neuron[j].Offset += temp;
                for (int k = 0; k < net[i].Neuron[j].Weights.Length; k++) // for each weight
                {
                    // Weight k sees the image itself in the first layer
                    // and the output of neuron k of the previous layer otherwise.
                    double input = i == 0 ? data[curr].Input[k] : net[i - 1].Neuron[k].LastState;
                    net[i].Neuron[j].Weights[k] -= temp * input
                        + Regularization * net[i].Neuron[j].Weights[k] / data.Count;
                }
            }
        }
    }

    /// <summary>
    /// Computes the error of the hidden layers
    /// </summary>
    /// <param name="net">network</param>
    private static void HiddenlayerError(MultiLayer net)
    {
        for (int k = net.Length - 2; k >= 0; k--)
        {
            // for each neuron
            for (int j = 0; j < net[k].Neuron.Length; j++)
            {
                net.Layer[k].Neuron[j].CurrentError = 0;
                // sum over all neurons i of the next layer:
                // error_j = sum_i(error_i * F'(S_i) * w_ij)
                for (int i = 0; i < net[k + 1].Neuron.Length; i++)
                {
                    net.Layer[k].Neuron[j].CurrentError += net.Layer[k + 1].Neuron[i].CurrentError
                        * net.Layer[k + 1].Neuron[i].Weights[j]
                        * net.Layer[k + 1].Neuron[i].ActivationFunction.Derivative(net.Layer[k + 1].Neuron[i].LastPulse);
                }
            }
        }
    }

    /// <summary>
    /// Computes the error of the output layer
    /// </summary>
    /// <param name="data">training set</param>
    /// <param name="net">network</param>
    /// <param name="curr">index of the current image</param>
    private void OutputLayerError(IList<NeuroImage<double>> data, MultiLayer net, int curr)
    {
        int last = net.Layer.Length - 1;
        // output-layer error for each neuron
        for (int j = 0; j < net.Layer[last].Neuron.Length; j++)
        {
            net.Layer[last].Neuron[j].CurrentError =
                ErrorFunction.Derivative(net.Layer[last].Neuron[j].LastState, data[curr].Output[j]);
        }
    }

    private void RandomShuffle(ref IList<NeuroImage<double>> data)
    {
        Random gen = new Random();
        for (int i = 0; i < data.Count; i++)
        {
            int ind1 = gen.Next(0, data.Count);
            int ind2 = gen.Next(0, data.Count);
            Swap(data, ind1, ind2);
        }
    }

    private void Swap(IList<NeuroImage<double>> data, int ind1, int ind2)
    {
        NeuroImage<double> temp = data[ind1];
        data[ind1] = data[ind2];
        data[ind2] = temp;
    }

    private bool Compare(double[] p1, double[] p2)
    {
        double error = 0.0;
        for (int i = 0; i < p1.Length; i++)
        {
            error += (p1[i] - p2[i]) * (p1[i] - p2[i]);
        }
        error /= 2;
        return error < OneImageMinError;
    }
}
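The `RandomShuffle` method above performs `data.Count` swaps of two randomly chosen elements. This mixes the training set, but in general it does not make all orderings equally likely, and it creates a new `Random` on every call. For comparison, here is a minimal sketch of the standard Fisher–Yates (Knuth) shuffle, a different technique from the one in the listing. The names `ShuffleSketch` and `FisherYates` are hypothetical, and the caller is assumed to keep a single `Random` instance for the whole training run.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the original project.
public static class ShuffleSketch
{
    // In-place Fisher–Yates shuffle: with a uniform random source,
    // every permutation of the list is equally likely.
    public static void FisherYates<T>(IList<T> data, Random gen)
    {
        for (int i = data.Count - 1; i > 0; i--)
        {
            // Pick j uniformly from [0, i]; the upper bound of Random.Next is exclusive.
            int j = gen.Next(0, i + 1);
            T temp = data[i];
            data[i] = data[j];
            data[j] = temp;
        }
    }
}
```

In the trainer, the loop in `RandomShuffle` could be replaced by a call such as `ShuffleSketch.FisherYates(data, gen)`, with `gen` stored as a (hypothetical) field so that consecutive epochs do not re-seed the generator.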

Appendix B:

private void cloudButton1_Click(object sender, EventArgs e)
{
    UpdateParametrizedSymbol();

    // Check that both trained networks exist before starting any background work.
    if (!System.IO.File.Exists("SymbolRecognise.json"))
    {
        MessageBox.Show("Error! File SymbolRecognise.json not found");
        return;
    }
    if (!System.IO.File.Exists("ColorRecognise.json"))
    {
        MessageBox.Show("Error! File ColorRecognise.json not found");
        return;
    }

    // Copy the image on the UI thread: controls must not be accessed from the worker thread.
    Bitmap image = new Bitmap(pictureBox1.Image);

    toolStripProgressBar1.ProgressBar.Show();
    Application.DoEvents();
    source.Colors.Clear();
    source.Text = null;

    Task.Run(() =>
    {
        var SymbolNetwork = Neuro.MLP.MultiLayerNeuralNetwork.Load("SymbolRecognise.json");
        var ColorNetwork = Neuro.MLP.MultiLayerNeuralNetwork.Load("ColorRecognise.json");

        System.Drawing.Size blockSize = new Size(10, 10);
        if (source.DefaultZoom)
        {
            // Scale the image to the requested width.
            System.Drawing.Size metrics = Work.BitmapWorker.RecalculateSize(image.Size, source.WidthValue);
            image = Work.BitmapWorker.Zoom(image, metrics);
        }
        // Both sides must be multiples of the block size so that the image splits into whole blocks.
        if (image.Width % blockSize.Width != 0 || image.Height % blockSize.Height != 0)
        {
            image = Work.BitmapWorker.Resize(image, Work.BitmapWorker.Round(image.Size, blockSize));
        }

        int blockPerLine = image.Width / blockSize.Width;
        int blockAmount = blockPerLine * (image.Height / blockSize.Height);

        // Optional preprocessing: symbols are recognised on a grayscale copy of the image.
        Bitmap imageToEncoding = source.UsePreworking ? Work.BitmapWorker.ColorToGray(image) : image;
        var text = new System.Text.StringBuilder();

        for (int i = 0; i < blockAmount; i++)
        {
            Rectangle rect = GetBlockRect(image, i);
            Bitmap temp = Work.BitmapWorker.Cut(image, rect);
            Bitmap encodeTemp = Work.BitmapWorker.Cut(imageToEncoding, rect);

            // Block colour: the colour network in 16-colour mode, otherwise the centre pixel.
            if (source.SixteenColorMode)
                source.Colors.Add(ColorNetwork.Compute(temp));
            else
                source.Colors.Add(temp.GetPixel(blockSize.Width / 2, blockSize.Height / 2));

            // Block symbol: the output neuron with the largest value.
            double[] input = CreateInput(encodeTemp);
            var res = SymbolNetwork.Compute(input);
            int index = res.ToList().IndexOf(res.Max());
            text.Append(source.Images[index]);

            if (source.Doublicate)
            {
                text.Append(source.Images[index]);
                source.Colors.Add(source.Colors.Last());
            }

            if (i % blockPerLine == blockPerLine - 1)
                text.Append('\n');
        }
        source.Text = text.ToString();

        // Back on the UI thread: hide the progress bar and show the result.
        BeginInvoke((MethodInvoker)(() =>
        {
            toolStripProgressBar1.ProgressBar.Hide();
            using (Form2 dlg = new Form2(source.Text, source.Colors))
            {
                dlg.ShowDialog();
            }
        }));
    });
}
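In 16-colour mode the handler delegates the block colour to `ColorNetwork`, which is meant to reduce each of the 100 pixel colours of a block to one of the 16 Windows console colours and then pick the most frequent one (the mode), choosing at random among ties. As a point of comparison, here is a minimal sketch of that rule without a neural network. Each pixel is mapped to the nearest palette entry by squared Euclidean distance in RGB, and the mode is then taken with random tie-breaking. This nearest-colour quantisation is a swapped-in technique, not the author's network. The RGB values are assumed to be the classic console palette in `System.ConsoleColor` order, and the names `ConsoleColorModeSketch`, `Nearest` and `Mode` are hypothetical. Pixels are passed as `(R, G, B)` tuples so the sketch does not depend on `System.Drawing`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: the most frequent console colour (the mode) of a block.
public static class ConsoleColorModeSketch
{
    // Assumed RGB values of the classic 16-colour Windows console palette,
    // in the order of the System.ConsoleColor enum (Black = 0 ... White = 15).
    private static readonly (int R, int G, int B)[] Palette =
    {
        (0, 0, 0), (0, 0, 128), (0, 128, 0), (0, 128, 128),
        (128, 0, 0), (128, 0, 128), (128, 128, 0), (192, 192, 192),
        (128, 128, 128), (0, 0, 255), (0, 255, 0), (0, 255, 255),
        (255, 0, 0), (255, 0, 255), (255, 255, 0), (255, 255, 255)
    };

    // Maps one pixel to the palette entry with the smallest squared RGB distance.
    public static ConsoleColor Nearest(int r, int g, int b)
    {
        int best = 0;
        int bestDist = int.MaxValue;
        for (int i = 0; i < Palette.Length; i++)
        {
            int dr = r - Palette[i].R;
            int dg = g - Palette[i].G;
            int db = b - Palette[i].B;
            int dist = dr * dr + dg * dg + db * db;
            if (dist < bestDist)
            {
                bestDist = dist;
                best = i;
            }
        }
        return (ConsoleColor)best;
    }

    // Quantises every pixel of a non-empty block and returns the most frequent
    // console colour; when several colours tie, one of them is chosen at random.
    public static ConsoleColor Mode(IEnumerable<(int R, int G, int B)> block, Random gen)
    {
        var counts = block
            .GroupBy(p => Nearest(p.R, p.G, p.B))
            .Select(grp => (Color: grp.Key, Count: grp.Count()))
            .ToList();
        int max = counts.Max(c => c.Count);
        var modes = counts.Where(c => c.Count == max).ToList();
        return modes[gen.Next(modes.Count)].Color;
    }
}
```

For a 10x10 block, the caller would collect the 100 pixels (for example with `GetPixel`) and pass them to `Mode`. The plain RGB distance is the simplest choice and could be replaced by a perceptual colour metric.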